Abstract

By the end of this material, readers will understand the intimate relationship between computers and programming, and appreciate the evolution of programming languages and the motivations behind it. They will learn about basic computer architecture, binary data representation, and the structure of a Python program.

```
if not input('Load JupyterAI? [Y/n]').lower() == 'n':
    %reload_ext jupyter_ai
```

Load JupyterAI? [Y/n]

Computer

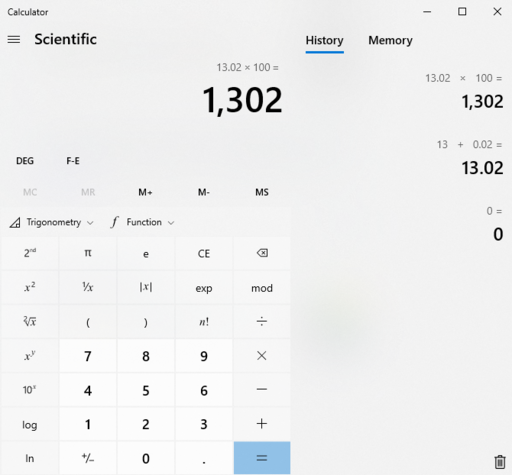

A calculator that is bigger and more advanced?

Figure 1: A calculator on a computer.

If so, is the abacus the first computer ever invented?

Figure 2: Abacus, an ancient mechanical computing device.

Is your smartphone a computer?

What defines a computer?[1]

In addition to performing arithmetic calculations, a computer can be programmed to perform different tasks.

Who invented the first computer?

Run the following cell to ask AI how to define a computer:[2]

```
%%ai
What defines a computer? Explain in one line.
```

What is the architecture of a computer?

A computer contains three main hardware components:

- Input device

- Processing unit

- Output device

Peripherals

Figure 3: Computer peripherals.

Input and output devices connected to a computer are called peripherals.

They allow users to interact with the computer in different ways.

Solution to Exercise 1

- 3D printer available at CityU

```
%%ai
Summarize what an output device of a computer is and explain briefly why
a monitor and a speaker are examples?
```

Solution to Exercise 2

- 3D scanner available at CityU

```
%%ai
Summarize what an input device of a computer is and explain briefly why
a keyboard and a mouse are examples?
```

Solution to Exercise 3

- Solid-state drive or hard disk

- Writable CD/DVD drive

- Touch screen

```
%%ai
Explain in one line why a headset is both an input device and an output device
at the same time.
```

Central Processing Unit

The brain of a computer is its processor, or Central Processing Unit (CPU). As shown in Figure 4, it is located on the motherboard and connected to different peripherals through different connectors.

For instance, you can check the CPU of your Jupyter Server by running the following command:

```
!lscpu
```

```text
Architecture: x86_64
  CPU op-mode(s): 32-bit, 64-bit
  Address sizes: 43 bits physical, 48 bits virtual
  Byte Order: Little Endian
CPU(s): 72
  On-line CPU(s) list: 0-71
Vendor ID: GenuineIntel
  Model name: Intel(R) Xeon(R) Gold 6248R CPU @ 3.00GHz
    CPU family: 6
    Model: 85
    Thread(s) per core: 1
    Core(s) per socket: 1
    Socket(s): 72
    Stepping: 0
    BogoMIPS: 5985.93
    Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon nopl xtopology tsc_reliable nonstop_tsc cpuid tsc_known_freq pni pclmulqdq ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand hypervisor lahf_lm abm 3dnowprefetch pti ssbd ibrs ibpb stibp fsgsbase tsc_adjust bmi1 avx2 smep bmi2 invpcid avx512f avx512dq rdseed adx smap clflushopt clwb avx512cd avx512bw avx512vl xsaveopt xsavec xsaves arat pku ospke md_clear flush_l1d arch_capabilities
Virtualization features:
  Hypervisor vendor: VMware
  Virtualization type: full
Caches (sum of all):
  L1d: 2.3 MiB (72 instances)
  L1i: 2.3 MiB (72 instances)
  L2: 72 MiB (72 instances)
  L3: 2.5 GiB (72 instances)
NUMA:
  NUMA node(s): 1
  NUMA node0 CPU(s): 0-71
Vulnerabilities:
  Gather data sampling: Unknown: Dependent on hypervisor status
  Itlb multihit: KVM: Mitigation: VMX unsupported
  L1tf: Mitigation; PTE Inversion
  Mds: Mitigation; Clear CPU buffers; SMT Host state unknown
  Meltdown: Mitigation; PTI
  Mmio stale data: Mitigation; Clear CPU buffers; SMT Host state unknown
  Reg file data sampling: Not affected
  Retbleed: Mitigation; IBRS
  Spec rstack overflow: Not affected
  Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl
  Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization
  Spectre v2: Mitigation; IBRS; IBPB conditional; STIBP disabled; RSB filling; PBRSB-eIBRS Not affected; BHI SW loop, KVM SW loop
  Srbds: Not affected
  Tsx async abort: Not affected
```

Two important components in the CPU are:

- Arithmetic and Logic Unit (ALU): Performs arithmetic and logical operations, like a calculator but on binary numbers. (More on this later.)

- Control Unit (CU): Directs the operations of the processor in executing a program.
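To give a feel for what "arithmetic on binary numbers" means, here is a minimal sketch of adding two unsigned integers using only the bitwise operations a logic circuit provides: XOR for the sum bits, AND for the carry bits, and a shift to move each carry one position left. It is not a description of how any particular ALU is wired; the function name `alu_add` and the 8-bit default width are assumptions made for illustration.

```python
def alu_add(a: int, b: int, width: int = 8) -> int:
    """Add two unsigned integers using only bitwise operations.

    Hypothetical helper for illustration. The result wraps around modulo
    2**width, as a fixed-width hardware register would.
    """
    mask = (1 << width) - 1        # e.g. 0b11111111 for width 8
    a, b = a & mask, b & mask
    while b:                       # repeat until no carries remain
        carry = (a & b) << 1       # a carry arises where both bits are 1
        a = (a ^ b) & mask         # sum of the bits, ignoring carries
        b = carry & mask           # carries move left; overflow is dropped
    return a


print(alu_add(5, 3), format(alu_add(5, 3), "08b"))  # 8 00001000
print(alu_add(200, 100))                             # 44, i.e. 300 wrapped modulo 256
```

The loop mirrors a chain of adders: each pass resolves the carries produced by the previous one, and the mask discards any carry out of the top bit.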

To visualize how a CPU works, run the CPU Simulator below.

- Note that all values in the RAM (Random Access Memory) are initially zero.

- Under Settings, click Examples → Add two numbers. Observe that the values in the RAM have changed.

- Click Run at the bottom right-hand corner.
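The fetch-and-execute cycle that the simulator animates can be imitated in a few lines of Python. This is a minimal sketch with a made-up instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and a single accumulator register; it is not the simulator's actual instruction set. As in the simulator, the program and its data sit in the same RAM (a Python list here), and the loop plays the role of the control unit: it fetches the instruction at the program counter and carries it out.

```python
def run(ram: list) -> list:
    """Execute the program stored in `ram` until HALT; return the final RAM.

    Hypothetical machine for illustration: instructions are (opcode, address)
    tuples and data are plain integers, both kept in the same list. Real
    machines encode both as binary sequences instead.
    """
    acc = 0  # accumulator: the register the ALU works on
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = ram[pc]  # fetch
        pc += 1
        if opcode == "LOAD":       # decode and execute
            acc = ram[address]
        elif opcode == "ADD":
            acc = acc + ram[address]
        elif opcode == "STORE":
            ram[address] = acc
        elif opcode == "HALT":
            return ram
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")


# Addresses 0-3 hold the program; addresses 4-6 hold the data.
ram = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", None), 7, 35, 0]
print(run(ram)[6])  # 42
```

Keeping instructions and data in one list is the point of the example: a program could, in principle, overwrite its own instructions, just as in a stored-program computer.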

Is there a rigorous definition of a computer?

Computer architecture may evolve over time as new technologies and innovations emerge. However, modern computers follow the same mathematical principles as the universal machine defined by Alan Turing, often regarded as the father of modern computing. Turing defined the universal machine to address the problem of computability, i.e., which numbers can be computed by programming a machine. Is there an easy way to understand Turing’s proof?
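A key idea behind the universal machine is that the description of a machine can itself be treated as data and handed to one general-purpose machine. The sketch below is a simplified Turing machine simulator, not Turing's original formulation: `run_turing_machine` is a single program that can run any machine given to it as a rule table, which is the sense in which it plays the role of a universal machine. The rule table `INCREMENT`, which adds 1 to a binary number, is a hypothetical example.

```python
BLANK = "_"

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
# This machine adds 1 to a binary number, starting at its rightmost bit.
INCREMENT = {
    ("carry", "1"): ("0", -1, "carry"),  # 1 + carry = 0, carry moves left
    ("carry", "0"): ("1", 0, "halt"),    # 0 + carry = 1, done
    ("carry", BLANK): ("1", 0, "halt"),  # past the leftmost bit: new leading 1
}


def run_turing_machine(rules, tape, head, state, max_steps=10_000):
    """Run the machine described by `rules` on `tape`, starting at `head`."""
    cells = dict(enumerate(tape))  # sparse tape, unbounded in both directions
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    left, right = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(left, right + 1)).strip(BLANK)


def increment(bits: str) -> str:
    return run_turing_machine(INCREMENT, bits, head=len(bits) - 1, state="carry")


print(increment("1011"))  # 1100
print(increment("111"))   # 1000
```

Swapping in a different rule table changes what is computed without touching `run_turing_machine`, much as loading a different program changes what a computer does.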

```
%%ai
Explain very briefly what a universal machine looks like.
```

```
%%ai
How does the problem of decidability relate to definability and computability?
```

Programming

What is programming?

What defines programming?

Programming, also called software development, is the process of writing programs for the computer to execute different tasks. But what is a program?

Solution to Exercise 4

The first six lines of binary sequences in the RAM. The last line ends the program.

```
%%ai
Summarize what a machine language is and give an example of a program in
machine language.
```

The CPU is capable of carrying out a set of instructions such as Add, Subtract, Store, etc., on numbers stored in the RAM.

Both the instructions and the numbers are represented as binary sequences.
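To make this concrete, here is a sketch of how an instruction could be packed into a binary sequence. The 8-bit format (a 4-bit opcode followed by a 4-bit address) and the opcode values in `OPCODES` are hypothetical; real instruction sets define their own, much larger, encodings. Note that the same bit pattern can be read either as an instruction or as a number: its meaning depends on how the CPU interprets it.

```python
# Hypothetical 4-bit opcodes; real CPUs define their own encodings.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def encode(mnemonic: str, address: int) -> str:
    """Pack an instruction into 8 bits: 4-bit opcode, then 4-bit address."""
    if not 0 <= address < 16:
        raise ValueError("address must fit in 4 bits")
    return format((OPCODES[mnemonic] << 4) | address, "08b")


def decode(bits: str) -> tuple:
    """Split an 8-bit string back into (mnemonic, address)."""
    word = int(bits, 2)
    return MNEMONICS[word >> 4], word & 0b1111


print(encode("ADD", 5))    # 00100101
print(decode("00100101"))  # ('ADD', 5)
print(int("00100101", 2))  # 37: the same bits read as a number
print(format(42, "08b"))   # 00101010: the number 42 as 8 bits
```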

Why do computers use binary representation?

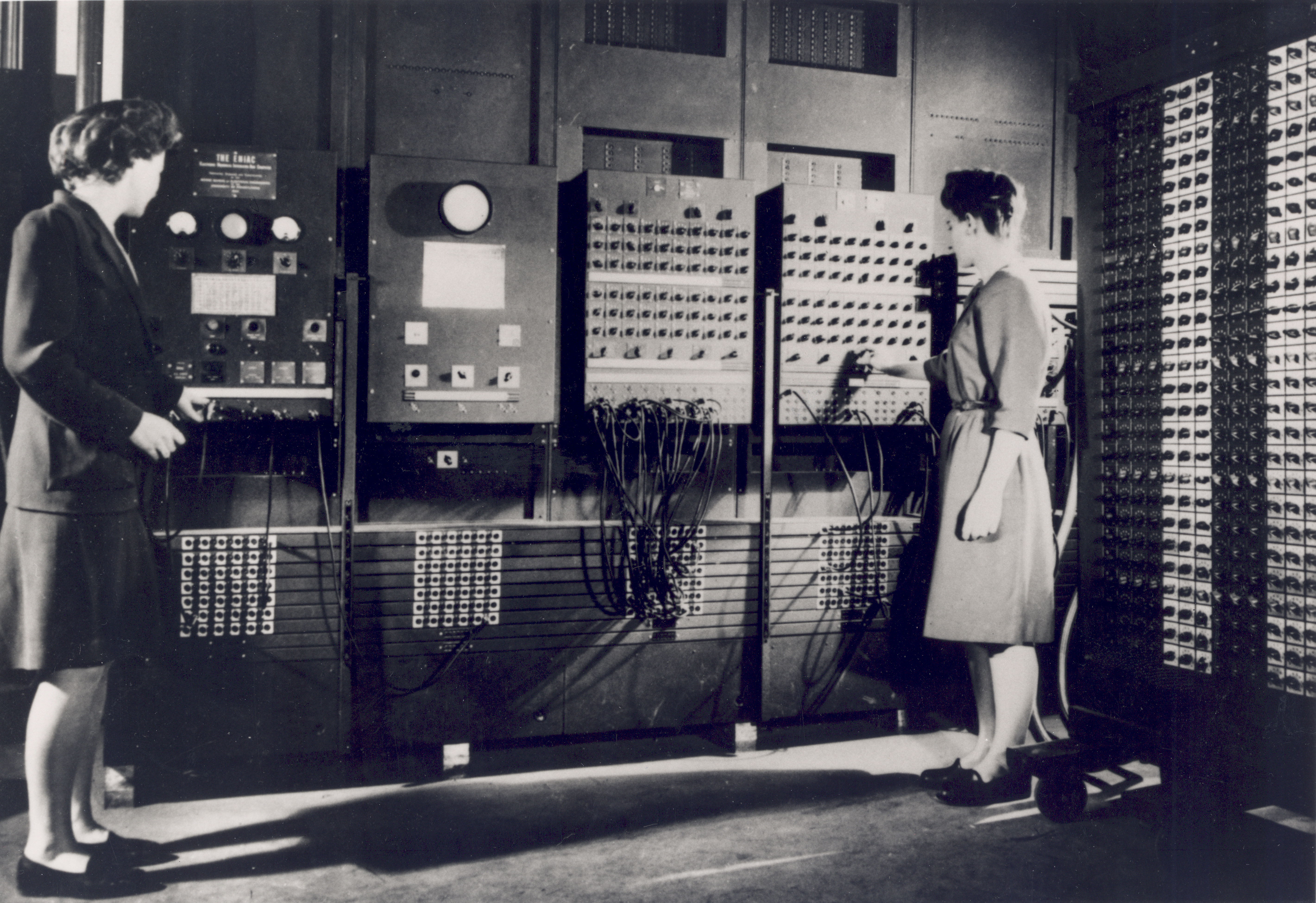

Figure 5: Programmers controlling the switches of ENIAC.


```python
### BEGIN SOLUTION
2 ** 10  # 2 choices for each of the 10 bits give 2**10 binary sequences of length 10.
### END SOLUTION
```

1024

```
%%ai
Explain the multiplication rule in combinatorics.
```

Why use binary numbers?

Unlike ENIAC, which used the decimal number system, the subsequent design of the Electronic Discrete Variable Automatic Computer (EDVAC) used the binary number system. The decision was explained in Section 5.1 of the design report authored by John von Neumann in 1945. While humans are accustomed to the decimal number system, von Neumann justified the use of binary by noting that human neurons operate in an all-or-none, i.e., binary, manner:

The analogs of human neurons ... are equally all-or-none elements. It will appear that they are quite useful for all preliminary, orienting, considerations of vacuum tube systems ... It is therefore satisfactory that here too the natural arithmetical system to handle is the binary one.

— John von Neumann

There are standards for representing characters, such as:

- ASCII

- Unicode

There are also additional standards for representing numbers other than non-negative integers:

- 2’s complement format for negative integers (e.g., -123)

- IEEE 754 floating-point format for non-integer numbers.
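Python can display these representations directly. The sketch below uses only built-ins and the standard `struct` module. The 8-bit width in the 2's complement example and the choice of UTF-8 (one common encoding of Unicode) are assumptions made for illustration; Python's own integers are not limited to 8 bits, so the helper masks off the low `width` bits to mimic a fixed-width register.

```python
import struct


def char_bits(ch: str) -> str:
    """ASCII code of a character, shown as 8 bits (ASCII itself uses 7)."""
    if ord(ch) > 127:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return format(ord(ch), "08b")


def twos_complement_bits(n: int, width: int = 8) -> str:
    """2's complement bit pattern of n in a register of the given width."""
    if not -(1 << (width - 1)) <= n < (1 << (width - 1)):
        raise ValueError(f"{n} does not fit in {width} bits")
    return format(n & ((1 << width) - 1), f"0{width}b")


def float64_hex(x: float) -> str:
    """IEEE 754 double-precision bytes of x, big-endian, as hex."""
    return struct.pack(">d", x).hex()


print(char_bits("A"))                   # 01000001 (ASCII code 65)
print(ord("中"), "中".encode("utf-8"))  # 20013 b'\xe4\xb8\xad' (U+4E2D in UTF-8)
print(twos_complement_bits(-123))       # 10000101
print(float64_hex(0.1))                 # 3fb999999999999a
```

The last line also hints at why 0.1 cannot be stored exactly: its binary expansion repeats, so the stored bits are a rounded approximation.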

```
%%ai
Why define standards such as ASCII and Unicode?
```

Why define different standards?

Different standards have different benefits.

- ASCII requires less storage per character (7 bits), but it represents fewer characters (only 128).

- Although digits are also represented in ASCII, the 2’s complement format is designed for more efficient arithmetic operations.
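A small sketch of the second point, assuming an 8-bit register: stored as ASCII text, "123" takes three bytes (one per digit character) and cannot be added directly, whereas in 2's complement a single adder handles both positive and negative numbers, so subtraction becomes addition. The helper names `add8` and `to_signed8` are hypothetical.

```python
text = "123".encode("ascii")
print(list(text))           # [49, 50, 51]: codes of the characters '1', '2', '3'
print(ord("7") - ord("0"))  # 7: a digit character must be converted before arithmetic
print(format(123, "08b"))   # 01111011: the number 123 fits in a single byte


def add8(a: int, b: int) -> int:
    """Add two 8-bit patterns, discarding any carry out of the top bit."""
    return (a + b) & 0xFF


def to_signed8(bits: int) -> int:
    """Read an 8-bit pattern as a 2's complement integer."""
    return bits - 256 if bits >= 128 else bits


# 123 - 23 computed as 123 + (-23): the pattern for -23 is 256 - 23 = 233.
print(to_signed8(add8(123, -23 & 0xFF)))  # 100
print(to_signed8(add8(23, -123 & 0xFF)))  # -100
```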

Generations of programming languages

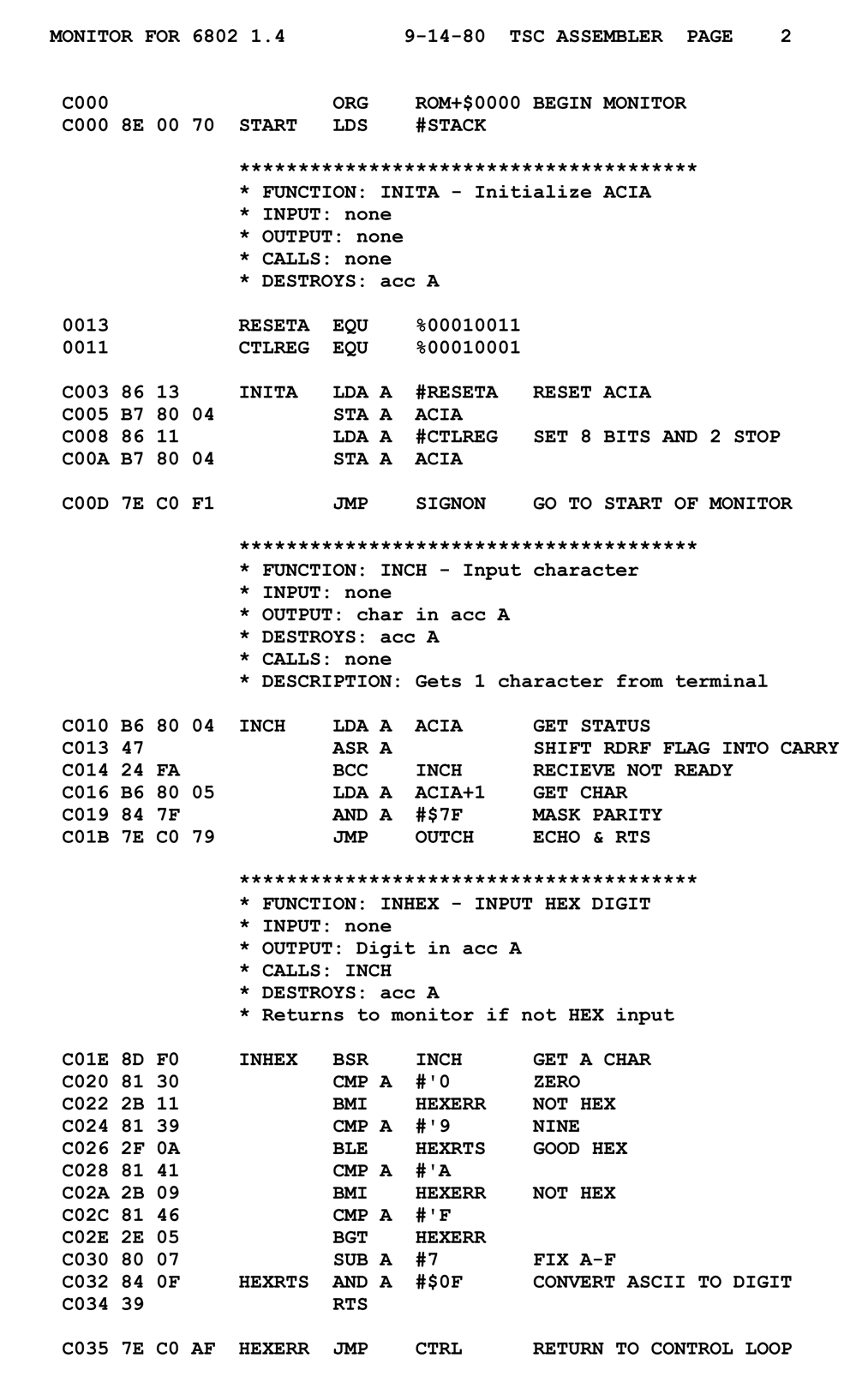

Are we going to start with machine language, i.e., by learning 2’s complement and the binary codes of different instructions?

No. Programmers do not write machine codes directly because it is too hard to think in binary representations.

Instead, programmers write human-readable mnemonics such as ADD, SUB, ...

This is called assembly language. An example is shown in Figure 6.

Figure 6: Code written in an assembly language.
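Python offers an assembly-like view of its own. The standard `dis` module disassembles a function into the bytecode instructions of the Python virtual machine. This is not the CPU's assembly language, but it has the same flavour: one human-readable mnemonic per low-level step. The exact instruction names vary between Python versions, so your output may differ from the names mentioned in the comments.

```python
import dis


def add(a, b):
    return a + b


# Prints one mnemonic per line, with names such as LOAD_FAST, BINARY_OP,
# and RETURN_VALUE (the exact names depend on the Python version).
dis.dis(add)

# The same information as data: the mnemonic (opname) of each instruction.
opnames = [instruction.opname for instruction in dis.get_instructions(add)]
print(opnames)
```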

```
%%ai
What is the assembly language most commonly used by programmers and what is its
main application?
```

```
%%ai
Explain the different instructions used in the different instruction set
architectures including x86-64 (AMD64), ARM, and RISC-V.
```

Are you going to learn an assembly language?

Both machine language and assembly language are low-level languages that are

- difficult to write for complicated tasks (requiring many lines of code), and

- platform-specific, i.e.,

  - the sets of instructions and their binary codes can be different for different types of CPUs, and

  - different operating systems use different assembly languages/styles.

Should we learn assembly languages?

Probably for programmers who need to write fast or energy-efficient code such as

- a driver that controls a 3D graphics card, or

- a program that controls a microprocessor with a limited power supply.

But even in the above cases, there are often better alternatives. Play with the following microprocessor simulator:

- Open https://micropython.org/unicorn/ in a browser.

- Click CHOOSE A DEMO → LED.

- Click RUN SCRIPT and observe the LED on the board.

- Run the demos ASSEMBLY and MATH respectively and compare their capabilities.

High-level Language

What is a high-level language?

The art of programming

Programmers nowadays write in human-readable languages such as

- Python

- Rust

- C/C++

- Java

- JavaScript

- TypeScript

- Pascal

- BASIC

- HTML

- PHP

- ...

These are called high-level languages.

- A program written in a high-level language gets converted automatically to a low-level machine code for the desired platform.

- This abstracts away low-level details that can be handled by the computer automatically.

For instance, a programmer need not care where a value is physically stored, because the computer can automatically find a free location to store it.

Different high-level languages can have different implementations of the conversion process:

- Compilation means converting a program well before executing it. E.g., C++ and Java programs are compiled.

- Interpretation means converting a program on the fly during its execution. E.g., JavaScript and Python programs are often interpreted.

Compiled vs interpreted languages

Roughly speaking, compiled programs run faster, but interpreted programs are more flexible and can be modified at run time. The truth, however, is more complicated. Python also has a compiler, which translates source code into bytecode, and, since version 3.13, an experimental JIT compiler. Is Python really an interpreted language?
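The built-in `compile` and `exec` functions expose the two steps separately: `compile` translates source code into a code object holding bytecode, and `exec` then has the interpreter run it. This sketch only illustrates that a compilation step exists even in Python; when you run a script or a notebook cell, CPython performs both steps for you. The source string and the variable names `x` and `y` are arbitrary examples.

```python
source = "y = x * 2 + 1"

code = compile(source, "<example>", "exec")  # step 1: source code -> bytecode (code object)
print(type(code).__name__)                   # code
print(code.co_code[:8])                      # first few raw bytecode bytes (version-dependent)

namespace = {"x": 20}
exec(code, namespace)                        # step 2: the interpreter executes the bytecode
print(namespace["y"])                        # 41
```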

```
%%ai
Explain in one or two paragraphs whether Python is really an interpreted
language, given that it also has a compiler and, recently, a JIT compiler, and
it can be run as a script like a scripting language.
```

What programming language will you learn?

You will learn to program in Python, which is currently the most popular language according to the TIOBE (The Importance Of Being Earnest) Programming Community Index.

We will cover

- basic topics including values, variables, conditionals, iterations, functions, and composite data types,

- advanced topics that touch on functional and object-oriented programming, and

- engineering topics such as numerical methods, optimizations, and machine learning.

Why Python?

Python is:

- expressive, getting things done with fewer lines of code than many other languages.

- popular, with an extensive set of libraries for mathematics, graphics, AI, machine learning, etc.

- free and open-source, so you get to see everything and use it without restrictions.

- portable, i.e., the same code runs on different platforms without modifications.

What does a Python program look like?

```python
import datetime  # library to obtain current year

cohort = input("In which year did you join CityU? [e.g., 2020]")
year = datetime.datetime.now().year - int(cohort) + 1
print("So you are a year", year, "student.")
```

```text
In which year did you join CityU? [e.g., 2020] 2025
So you are a year 1 student.
```
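Because `input` waits for someone to type, the calculation above is awkward to test repeatedly. A common refactoring, sketched here with a hypothetical function name `year_of_study`, moves the arithmetic into a function and lets the current year be passed in explicitly; when it is omitted, the function falls back to the current year from `datetime.date.today()`.

```python
import datetime
from typing import Optional


def year_of_study(cohort: int, current_year: Optional[int] = None) -> int:
    """Year of study for a student who joined in `cohort` (first year = 1)."""
    if current_year is None:
        current_year = datetime.date.today().year
    return current_year - cohort + 1


print(year_of_study(2025, current_year=2025))  # 1
print(year_of_study(2022, current_year=2025))  # 4
```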

A Python program contains statements, just like sentences in natural languages. E.g., `cohort = input("In which year did you join CityU? ")` obtains some information from user input.

For the purpose of computations, a statement often contains expressions that evaluate to certain values. E.g., `input("In which year did you join CityU? ")` is an expression whose value is what you enter at the prompt.

That value is then given the name `cohort`.

Expressions can be composed of the following objects:

- Functions such as `input`, `now`, and `int`, which, like math functions, return values based on their arguments, if any.

- Literals such as the string `"In which year did you join CityU? "` and the integer `1`. They are values you type out literally.

- Variables such as `cohort` and `year`, which are meaningful names given to values.
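The standard `ast` module can pick these pieces out of the example program automatically. The sketch below parses (but does not run) the two statements and sorts their parts into functions, literals, and names. It is a simplification: any called name is treated as a function, and the module name `datetime` ends up among the names, since it is also just a name bound to a value.

```python
import ast

source = """
cohort = input("In which year did you join CityU? ")
year = datetime.datetime.now().year - int(cohort) + 1
"""

tree = ast.parse(source)  # parsing only; nothing is executed

functions, literals, names = set(), set(), set()
for node in ast.walk(tree):
    if isinstance(node, ast.Call):
        # int(...) calls a plain name; datetime.datetime.now() calls an attribute.
        func = node.func
        functions.add(func.attr if isinstance(func, ast.Attribute) else func.id)
    elif isinstance(node, ast.Constant):
        literals.add(node.value)
    elif isinstance(node, ast.Name):
        names.add(node.id)

names -= functions  # drop names such as int that are only used as functions

print("Functions:", sorted(functions))  # ['input', 'int', 'now']
print("Literals:", literals)
print("Names:", sorted(names))          # ['cohort', 'datetime', 'year']
```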

To help others understand the code, there are also comments that start with `#`.

They explain the code to humans and are not executed by the computer.

Solution to Exercise 6

Programming using natural language. A step in this direction: vibe coding.

Writing programs that people enjoy reading, as in literate programming. A step in this direction: nbdev.

Literate programming

```
%%ai
What do you think the next generation of programming should be?
```

You can run a cell by selecting it and pressing Shift + Enter.

To edit a markdown cell, double-click the cell until you see a cursor. Run the cell to render the markdown.

The CPU simulator follows the von Neumann architecture, which realizes Turing’s conception of the stored-program computer, where program and data are stored in the same memory. This is in contrast to the Harvard architecture, which uses separate memories for program and data.

- Turing, A. M. (1937). On Computable Numbers, with an Application to the Entscheidungsproblem. Proceedings of the London Mathematical Society, s2-42(1), 230–265. 10.1112/plms/s2-42.1.230

- von Neumann, J. (1993). First draft of a report on the EDVAC. IEEE Annals of the History of Computing, 15(4), 27–75. 10.1109/85.238389